Jay and Ryanne came over to my place and interviewed Jamais a couple months ago.

Here’s the end result:


Interview with Electric Sheep Artist Spot Draves – Part 1



The exhibit features Spot Draves, Georg Janick, Feathers Boa, Bryn Oh, Adam Ramona, Aiyas Aya, Ub Yifu, and Crash Perfect.

(Sponsored by The Wishfarmers.)

Keep an eye on my Node Zero Gallery Category for more interviews with artists all month long.

The interview below is the first of several parts. It took place on January 30, 2008.

Spot: I’ve been programming computers my whole life, and this is the distillation of all of that experience. So yeah, they’re not supposed to look like sheep. They’re not supposed to look like anything at all. In fact, I don’t even really control what they look like specifically, because they are created by this Internet distributed cyborg mind, and they’re created by everybody who’s watching them.

The reason they are called the "electric sheep" is because it's the computer's dream, and not just your computer, but like THE computer, like the Gaian All: all the computers on the internet, connected, and all the people behind them, as one entity.

What I did was, I wrote the software, and developed the algorithm. And it’s based on a visual language, which is a space of possible forms. And then, all the computers that are running the software communicate over the internet to form a virtual supercomputer that then realizes the animations. It takes an hour per frame to render.

Now, the one in Menlo Park is double the resolution and six times the bandwidth.

Lisa: So this is something in RL, something people can go and see in the physical world?

Spot: Yes. It’s on a flat panel with a frame around it that hangs on the wall. A 65″ plasma screen.

Lisa: Where does that live?

Spot: The company is called Willow Garage.

I designed the frame and had it built, and had it installed, and that one has some special electronics so that it shuts down when nobody is watching, to save power.

Lisa: So this is running off a computer? (We are watching as he projects onto my living room wall.) So it's basically a screen hung on a wall and attached to a computer that's running the art?

Spot: That’s right. And that one has a terabyte database. This one is 100 gigabytes.

Lisa: So it’s always generating new art? Or is it sort of recycling through?

Spot: No. What it does is this. See, it takes an hour to render each frame, and there are 30 frames per second, so this is far from real time. I mean, what is that, a factor of 100,000? So you can't generate it in real time, and that's part of the inspiration for the virtual worldwide supercomputer.
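Spot's "factor of 100,000" holds up to a quick back-of-the-envelope check. This sketch assumes one hour of render time per frame on a single machine and 30 frames per second of playback, both figures from the interview; the function name `realtime_slowdown` is ours, for illustration only.

```python
SECONDS_PER_HOUR = 3600

def realtime_slowdown(render_hours_per_frame, fps):
    """Seconds of single-machine compute needed per second of playback."""
    render_seconds_per_frame = render_hours_per_frame * SECONDS_PER_HOUR
    return render_seconds_per_frame * fps

factor = realtime_slowdown(1, 30)
print(f"{factor:,.0f}x slower than real time")  # 108,000x slower than real time
```

So the exact figure is about 108,000, right in line with Spot's estimate.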

Lisa: Things the “hive mind” has already created.

Spot: Yes.

Lisa: A snapshot, if you will?

Spot: Yes, and then I edit it. Let me tell you more about the process, which is multifarious and complicated. The bottom line is that it all gets stored in a video graph, which is on the computer, and played back. It’s in 1000 pieces that play back in a non-repeating sequence. So it’s infinitely morphing, and non-repeating, but you do have refrains. So images, sheep, do come back, but then after you see a sheep, it will go and do something else.

So like, watching the video, there's an algorithm that is running live, as you watch it. But the algorithm is like walking in a garden. Ya know, like an English garden with paths? As you walk along the path you see pretty flowers, and then you come to an intersection, and you have your choice of which path to take next. And so, more or less, if you wander at random, you will come back and walk the same path twice, and see the same thing twice, but then you'll go and do something different. So that's cool, because there are some parts of the garden that are really remote, and the only way to get to them is by a certain sequence of turns, and so there are some sheep that only appear extremely infrequently, like, ya know, the rare, special ones. So in order to see the whole thing, you'd have to watch continuously for months.
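The garden Spot describes behaves like a random walk on a directed graph: each clip is a stretch of path, and at every intersection the player picks one of the clips that can follow. Below is a minimal sketch with a tiny, made-up graph. The clip names, `VIDEO_GRAPH`, and `play` are hypothetical and not Electric Sheep's actual playback code. The clip "rare" can only be reached by the turn sequence a, c, rare, so a uniform walk visits it less often than any other clip.

```python
import random
from collections import Counter

# Hypothetical video graph: each clip maps to the clips that may follow it.
# "rare" is only reachable via "a" -> "c" -> "rare".
VIDEO_GRAPH = {
    "a": ["b", "c"],
    "b": ["a", "d"],
    "c": ["a", "d", "rare"],
    "d": ["a", "b"],
    "rare": ["a"],
}

def play(graph, start, steps, seed=None):
    """Return a playback sequence produced by a uniform random walk."""
    rng = random.Random(seed)
    clip = start
    sequence = [clip]
    for _ in range(steps):
        clip = rng.choice(graph[clip])  # pick a path at the intersection
        sequence.append(clip)
    return sequence

seq = play(VIDEO_GRAPH, "a", 10_000, seed=1)
print(Counter(seq).most_common())  # "rare" should come last
```

Clips repeat (Spot's "refrains"), but the order never settles into a fixed loop, because each turn is chosen afresh.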

So, this one, in this 100 GB one, there are 1000 clips. If you played those clips/sheep (I’m sort of switching back and forth on what they are called), if you played them all end to end, it would be like 18 hours. So as far as a human’s concerned, it’s infinite.
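Combined with the render cost Spot mentions earlier, this figure shows why a distributed "virtual supercomputer" is needed. This is only a rough estimate. It assumes 18 hours of footage at 30 frames per second and one machine-hour per frame, and it ignores editing, discarded renders, and differences between machines.

```python
HOURS_OF_FOOTAGE = 18
FPS = 30
RENDER_HOURS_PER_FRAME = 1
HOURS_PER_YEAR = 24 * 365

frames = HOURS_OF_FOOTAGE * 3600 * FPS           # total frames in the collection
machine_hours = frames * RENDER_HOURS_PER_FRAME  # single-machine render time
machine_years = machine_hours / HOURS_PER_YEAR

print(f"{frames:,} frames, about {machine_years:.0f} machine-years of rendering")
# 1,944,000 frames, about 222 machine-years of rendering
```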

Lisa: You went to Carnegie Mellon right?

Spot: Oh yeah, it really affected me. That's Hans Moravec's homeland. I was really immersed in those ideas when I was a student.

Lisa: You were a student in Artificial Intelligence there?

Spot: Well, I studied metaprogramming and the theory of programming languages. So what I did was create languages for creating visual languages for doing multimedia. Basically, a special programming technique for multimedia processing, like real-time video and 3-D computer graphics with audio, and in particular in a feedback loop with a human being.

And so, I didn’t create a language. I created a language for creating languages, because I wanted to make it easier for everybody to create their own language. And so you’ll get these towers of languages, and it’s almost, basically like virtual reality, where you have realities within realities, where you can have languages within languages.

Lisa: And they all fit into the same architecture?

Spot: Yeah. So you can analyze these things coherently, and you can create programs which process and optimize these structures.

The genetic code is now in XML. The language was the key innovation.

Lisa: Wow. What did you use before the XML? What else were you doing that with?

Spot: Before XML, for this genetic code, I just had some stupid text format which I made up myself.

Lisa: Ah. I see. But it was hard to do the kind of architecture you described, without having XML right?

Spot: Oh well that stuff I did more with LISP. Scheme in particular. Scheme is just a version of LISP.

Lisa: Interesting…

Spot: So the visual language is the genetic code. It's the mapping from the genotype to the phenotype. And so each of the sheep has virtual DNA that controls how it looks and how it moves. And everything you see is an expression in that language. And then, it's a continuous language. It has a lot of special properties, because it was designed to be able to do this. It's made with floating-point numbers, and part of the idea behind this whole thing is that life and its existence are continuous.
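To make the genotype-to-phenotype idea a little more concrete, here is a minimal sketch that assumes a made-up XML genome built from floating-point parameters. The `<genome>` and `<gene>` elements, the parameter names, and the helpers `parse_genome` and `interpolate` are all hypothetical; this is not the actual Electric Sheep genome format. Because every gene is a float, any two genomes can be blended continuously, which is one way a continuous language lets one sheep morph smoothly into another.

```python
import xml.etree.ElementTree as ET

# Hypothetical genomes; element and attribute names are illustrative only.
SHEEP_A = """<genome>
  <gene name="rotation" value="0.0"/>
  <gene name="scale" value="1.0"/>
  <gene name="hue" value="0.2"/>
</genome>"""

SHEEP_B = """<genome>
  <gene name="rotation" value="90.0"/>
  <gene name="scale" value="0.5"/>
  <gene name="hue" value="0.8"/>
</genome>"""

def parse_genome(xml_text):
    """Read each <gene> element into a name -> float mapping."""
    root = ET.fromstring(xml_text)
    return {g.get("name"): float(g.get("value")) for g in root.iter("gene")}

def interpolate(a, b, t):
    """Linearly blend two genomes: t=0 gives a, t=1 gives b."""
    return {name: (1 - t) * a[name] + t * b[name] for name in a}

a, b = parse_genome(SHEEP_A), parse_genome(SHEEP_B)
halfway = interpolate(a, b, 0.5)
print(halfway)  # {'rotation': 45.0, 'scale': 0.75, 'hue': 0.5}
```

Stepping `t` from 0 to 1 in small increments would give a sequence of in-between genomes, each of which could be rendered as one frame of a transition.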

To be continued…

This post and all the art in it is under the same Creative Commons Attribution 2.5 Generic license, as is all of Spot‘s art.

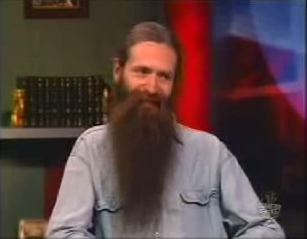

Video of Aubrey de Grey on Colbert Report

Wow, my worlds collide again. I just met Aubrey last October.

Now, he’s on the Colbert Report.

(Sidenote: I have a 45-minute interview of my own with him that I'll be putting up soon, but I really wanted to present it just the right way. I told him I wanted the "Homer Simpson version" of what the hell he was talking about, and by golly, I think he gave it to me.)

Stephen's skepticism actually does a really nice job of framing Aubrey's arguments too! Nice work, Stephen!

You can find out more by picking up a copy of Aubrey’s book too.

Learn more about Aubrey’s SENS platform.

All hail The Colbert Report.

Of Mice and Mitochondria… Applying AI to Bioinformatics to Cure Disease – Interview with Ben Goertzel – Part 1


I tracked Ben Goertzel down at the Virtual Worlds conference on October 11, 2007. Ben's company, Biomind, attempts to address the current disconnect between three different scientific communities that really need to work together in order to find answers: biologists, bioinformaticists, and the artificial intelligence community. As a result of this disconnect, biologists are not making the most of their research data.

After talking with him for an hour about the Novamente Cognition Engine, which will soon be used in conjunction with Second Life avatars and an HTTP proxy created by the Electric Sheep Company to train dogs in Second Life, Ben casually mentioned a couple of examples of the technology of his other company, Biomind. They "blew my little (bio)mind" when I learned what its software has been learning.

We’ll get to training dogs in Second Life soon, I promise. But this, arguably, is a little more time sensitive, so I wanted to tell you about it first.

Ben: Well here’s my vision – the vision I had in 2001 and 2002 when I got the idea to start Biomind. I could see that experimental biology technology was advancing like crazy, but biologists’ ability to understand the data produced by their machines just wasn’t keeping up. To put it simply, “What if we could feed all this data into an AI and have the AI really understand it, and produce the biological and medical answers we need?”

By this time, biologists have discovered an incredible amount of data that’s barely understood, and a decent fraction of it is online. Talking just about the data that’s already there online, right now – my feeling is that if you were to feed it all into a big database, and let the AI analyze it, and see what discoveries it comes up with, you could discover all sorts of cures to diseases. – Ben Goertzel, Biomind/Novamente

Biologists are great at running experiments, but typically they’ll gather a huge mass of data from an experiment and then analyze it in a fairly simplistic way, one that only mines 1% of the information available within the data they’ve gathered. Then they’ll take this 1% of information and use it to design another experiment. What they’re doing works, but not as fast as would be possible with more intelligence applied to interpreting the data the machines spit out.

There are also some specific shortcomings in the standard data analysis procedures they use, especially in regard to understanding biological systems as whole systems. The standard data analysis methods biologists use are biased toward zooming in on one gene, one protein, one mutation that makes a difference. AI methods have a lot more capability to understand the interactions between different parts of a biological system, and it is these interactions that really make life LIFE!

But these interactions are complicated to understand – if I look at the spreadsheet of data coming out of some modern biological equipment (say a microarrayer) I can’t see the biological system dynamics in all those numbers … and conventional data analysis methods can’t usually see them either. But AI methods can look at the reams of messy, noisy data and pick out some really important glimmers of the holistic biological system underneath.
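Ben's point about single-gene bias versus interactions can be illustrated with a toy example. The data below are invented. Two hypothetical genes each have the same average level in both groups, so looking at either one alone shows nothing, yet their product separates the groups perfectly. This only illustrates the concept; it is not Biomind's method.

```python
from statistics import mean

# Invented toy samples: (gene_x, gene_y, label).
# Label 1 when x and y have the same sign, 0 otherwise.
SAMPLES = [
    (+1.0, +1.0, 1), (-1.0, -1.0, 1),
    (+1.0, -1.0, 0), (-1.0, +1.0, 0),
]

def mean_by_label(feature, label):
    """Average of a feature over the samples carrying the given label."""
    return mean(feature(x, y) for x, y, lab in SAMPLES if lab == label)

def gap(feature):
    """Difference in the feature's average between group 1 and group 0."""
    return mean_by_label(feature, 1) - mean_by_label(feature, 0)

print("gene_x alone:", gap(lambda x, y: x))           # 0.0
print("gene_y alone:", gap(lambda x, y: y))           # 0.0
print("x * y interaction:", gap(lambda x, y: x * y))  # 2.0
```

A per-gene comparison of means reports no difference at all, while a feature that looks at the pair finds the whole signal.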

Lisa: Isn't this the same problem that bioinformatics is trying to solve?

Ben: Yeah, bioinformatics is the discipline that deals with crunching bio data. And it has obviously made huge advances in the last decade. But when you really get into it, almost everyone trained in bioinformatics recently is really trained in very specific stuff – for instance, gene sequence analysis. However, bioinformatics training doesn’t include much about data mining, mathematics, or advanced statistics. So the bioinformaticists’ training isn’t much more advanced than that of the biologists, by and large, in terms of mining the complex interactions and dynamics out of reams of experimental data.

I mean, at Biomind we've been doing specific work along these lines for a while. In a recent experiment, we took three data sets off the web, which are about mice under calorie restriction diets, fed those three data sets into our AI system, and then analyzed them to find out which genes are most important for distinguishing calorie restriction from control. We were able to pinpoint a couple of genes that no one has ever thought were important for calorie restriction before, but I'm quite certain they are. And this is just from three data sets! I mean, imagine if you could feed in tens of thousands of data sets, including ones related to various aspects of aging and aging-related diseases…
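The basic question here, which genes best distinguish calorie restriction from control, is often first approached with a simple per-gene statistic. The sketch below swaps in a plain Welch's t-statistic as a stand-in; it is not Biomind's AI method, and the gene names and expression values are invented for illustration.

```python
from math import sqrt
from statistics import mean, variance

# Invented expression values (arbitrary units) for hypothetical genes.
CALORIE_RESTRICTED = {
    "gene_1": [5.1, 5.3, 4.9, 5.2],
    "gene_2": [2.0, 2.4, 1.8, 2.2],
    "gene_3": [7.0, 7.1, 6.9, 7.2],
}
CONTROL = {
    "gene_1": [5.0, 5.2, 5.1, 4.8],
    "gene_2": [3.1, 3.4, 3.0, 3.3],
    "gene_3": [6.1, 6.3, 6.0, 6.2],
}

def welch_t(a, b):
    """Welch's t-statistic for the difference in means of two samples."""
    return (mean(a) - mean(b)) / sqrt(variance(a) / len(a) + variance(b) / len(b))

def rank_genes(cr, control):
    """Sort genes by |t|, most discriminating first."""
    scores = {g: welch_t(cr[g], control[g]) for g in cr}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)

for gene, t in rank_genes(CALORIE_RESTRICTED, CONTROL):
    print(f"{gene}: t = {t:+.2f}")
```

A one-gene-at-a-time score like this is exactly the kind of analysis Ben says misses interactions between genes; it is shown here only as a baseline for what "distinguishing" means.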

Now, if Biomind was richer, we’d follow this analytic work up with some wet lab work. Mutate those genes in mice. Make some mutant mice. Give some of them a calorie restriction diet, and see what happens. As it is, Biomind is not rich. So we’ll just publish a paper and hope that somebody else picks up on it, or maybe partners with us in the future.

On another note, when we worked with the CDC a couple years ago, our AI crunched some of their mutation data gathered from people with Chronic Fatigue Syndrome, and what came out of it was the first-ever evidence that there is some sort of basis for CFS. That paper made a pretty big hit in the CFS community.

And we've done some great work with Davis Parker from the University of Virginia on understanding Parkinson's and Alzheimer's diseases based on heteroplasmic mutations in mitochondrial DNA.

Lisa: When did you do that work?

Ben: The Parkinson's work was in 2004. The Alzheimer's work is current research, but the preliminary results are pretty exciting, and the preliminary indications are that Alzheimer's works basically the same way as Parkinson's, but with different genes and mutations involved.

Part One of Two

References for Ben Goertzel Articles and Interviews

Frank Zappa On David Letterman

This is just cool:

Frank Zappa on David Letterman.

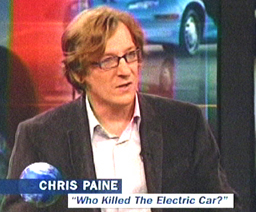

Chris Paine On The Daily Show


I knew Chris Paine way back in 1993, when I was working with him on Telemorphix’s 21st Century Vaudeville.

He was a really sweet, smart, and very creative guy with big ideas about everything. It's nice to see him pull it all together to create his little masterpiece, Who Killed the Electric Car?

Chris Paine On The Daily Show (Quicktime, 15 MB)

Dabble Record for this interview

Markos Moulitsas Zuniga On The Colbert Report

Daily Kos dude Markos Moulitsas Zuniga cruised through the Colbert Report last week. (April 6, 2006, I think)

Video-Markos Moulitsas Zuniga on the Colbert Report (12 MB)

Audio-Markos Moulitsas Zuniga on the Colbert Report (MP3 – 8 MB)

He's got a new book out called Crashing the Gate.

My Interview With The All Camera Phone Music Video Director Grant Marshall

It’s my first new post for O’Reilly’s Digital Media website:

How To Shoot Broadcast Quality Music Videos On A Camera Phone

This is a pretty neat story from beginning to end — a lesson in what can be accomplished when someone sticks to a vision and sees it through to the end. Right on to Blast Records and The Presidents of the United States of America (P.U.S.A.) for taking a chance too!

Check out the Making Of video that Grant let me host, too.

This development is more than a novelty. It’s a working demonstration of the natural artistic progression towards the integration of new mobile video technology within existing art forms.

I predict that it will soon be commonplace for bands to shoot mobile phone-based video of interviews, practices, performances, songs-in-progress, or whatever, and post it to their websites…

Mobile phone cameras can only record at 1/3000 of standard broadcast quality, and they don't capture movement very well. In addition, these phones only recorded at 10 frames per second, even though the manufacturer had promised that they'd record at 15 fps.

During the shoot, the phones were so temperamental that they’d just turn off at any point without warning, so Grant had the band play the song 24 times at half speed in order to provide enough footage to edit together one good take of the song.
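The frame-rate figures above are easy to put in numbers. The first calculation uses only the post's figures (10 fps delivered versus 15 fps promised). The second is an assumption the post does not state: if footage of the band playing at half speed were later sped up 2x to restore the song's tempo, each second of finished video would draw on twice as many captured frames. The helper `effective_fps` is hypothetical.

```python
PROMISED_FPS = 15
ACTUAL_FPS = 10

shortfall = 1 - ACTUAL_FPS / PROMISED_FPS
print(f"Phones captured {shortfall:.0%} fewer frames than promised")  # 33%

def effective_fps(capture_fps, slowdown):
    """Frames per second of finished video, ASSUMING footage shot at
    1/slowdown tempo is sped back up by the same factor (hypothetical)."""
    return capture_fps * slowdown

print(effective_fps(ACTUAL_FPS, 2))  # 20
```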

Howard Dean On Meet The Press

This is from May 22, 2005.

Video – Howard Dean On Meet The Press – Parts 1-6

Audio – Howard Dean On Meet The Press – Parts 1-6

My Interview On Junket 415 – On Mondoglobo.net

This is from September 22, 2005. I was interviewed on Junket 415, another of Mondoglobo.net's cool podcast shows.

Here's a direct link to the show. (My interview starts at 14:23.)